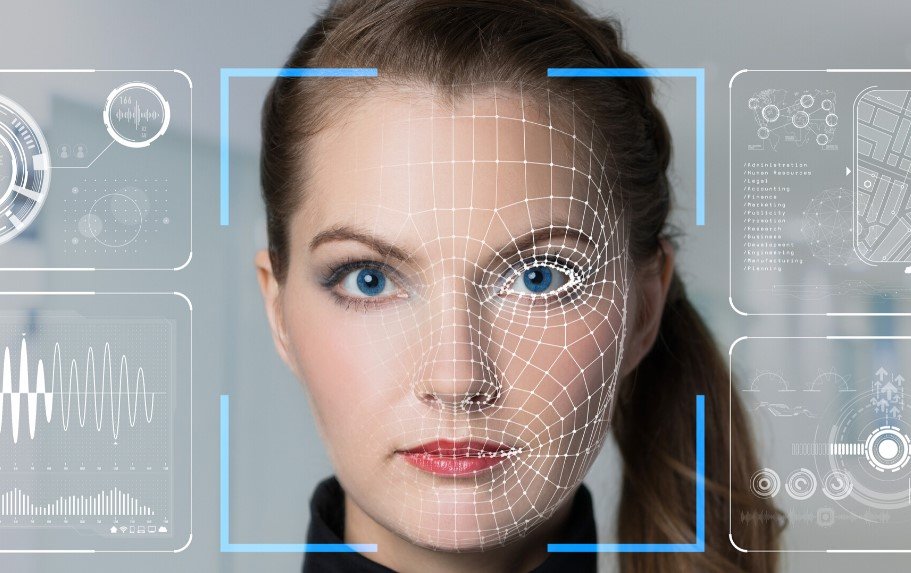

A Rotherham man named Craig Hadley faced a shocking ordeal when AI facial recognition software at a Sports Direct store mistakenly identified him as a fraudster, leading to his removal from the shop. The incident, which happened recently at the Parkgate Shopping Centre, highlights growing concerns about errors in AI technology used for security in retail settings.

The Incident Unfolds

Craig Hadley walked into the Sports Direct store expecting a routine shopping trip. Instead, staff confronted him based on an alert from Facewatch, the AI software the company uses to spot potential thieves.

He said he was confused at first and thought it might be a prank. But the situation turned serious when employees accused him of fraud and escorted him out in front of other customers.

The mix-up stemmed from an earlier fraud case in which another man, who also had a bald head and a beard, had tricked the store. Staff had wrongly flagged Hadley weeks earlier, and the AI system compounded that error by linking his face to the fraudster's case.

Hadley later proved his innocence by providing identification and allowing a manual check of CCTV footage. Facewatch then removed his image from their database.

This event left him feeling anxious for weeks, worried that his photo might spread to other stores and cause more trouble.

Broader Issues with AI Facial Recognition

AI tools like Facewatch aim to curb shoplifting by scanning faces against watchlists of known offenders. However, mistakes do happen, especially when people share similar features.

Experts point out that facial recognition technology can struggle with variations in lighting, angles, or even simple resemblances. In Hadley’s case, the fraudster was at the till around the same time, adding to the confusion.

Civil liberties groups warn that such systems create secret blacklists without proper oversight. People end up flagged without evidence or a chance to defend themselves.

Recent studies show error rates in facial recognition can reach up to 20 percent in real-world settings, depending on the software and conditions. This has led to calls for stricter regulations.

Other incidents include a woman in Manchester wrongly accused of stealing items due to a similar AI glitch. These cases show how everyday shoppers can suffer from tech flaws.

Sports Direct’s Response and Apology

Sports Direct quickly admitted the error and apologized to Hadley. They called it a genuine mistake and promised a full investigation.

The company also committed to extra training for staff to handle AI alerts better. This step aims to prevent future mix-ups.

Hadley accepted that human error played a role but stressed the need for better safeguards. He noted that being added to a national database without cause could harm anyone’s reputation.

Facewatch emphasized that avoiding false positives is a top priority. The company says it works to refine its algorithms, but balancing security with accuracy remains a challenge.

Similar Cases and Growing Concerns

Around the world, AI facial recognition has led to wrongful accusations. In the United States, several men were arrested based on faulty matches, only to be cleared later.

Here in the UK, police use similar tech for crowd surveillance, but errors have sparked debates about privacy and fairness.

Advocates argue for transparency, like requiring companies to notify people if they get flagged and offering easy ways to appeal.

Key risks of AI facial recognition in retail include:

- High error rates for people with similar looks
- Potential for bias against certain ethnic groups
- Lack of an immediate appeals process

These points underline why many push for laws to govern how stores deploy such systems.

Impact on Shoppers and Future Outlook

For Hadley, the experience was humiliating and stressful. He vows never to return to Sports Direct, fearing more issues.

It also raised his awareness of how AI invades daily life without much regulation. He now wonders about its use in other places like airports or events.

Looking ahead, experts predict more incidents unless improvements happen. Governments are considering new rules to ensure AI tools are tested rigorously before wide use.

| Aspect | Current Challenge | Proposed Solution |
|---|---|---|
| Accuracy | Error rates of up to 20% in varied conditions | Better training data and regular audits |
| Privacy | Secret watchlists compiled without consent | Mandatory notifications and appeal rights |
| Bias | Higher error rates for some demographic groups | Inclusive algorithm development |
| Oversight | Limited regulation in retail | Stricter laws and independent reviews |

This table shows some ways to address ongoing problems with the technology.

The incident serves as a reminder for shoppers to know their rights if they face a similar situation. Reporting errors promptly can help get records cleared quickly.

What do you think about AI in stores? Share your thoughts in the comments below or pass this story along to raise awareness.